LLM-Planner: Few-Shot Grounded Planning for Embodied Agents with Large Language Models

Published:

This paper introduces LLM-Planner, which utilizes LLMs as high-level planners for embodied agents, allowing them to generate and adapt plans according to the current environment. Experiments on the ALFRED dataset show that using less than 0.5% of paired training data, LLM-Planner achieves competitive performance with recent baselines that are trained using the full training data.


Abstract

- The study focuses on using LLMs as high-level planners for embodied agents that follow natural language instructions to complete complex tasks in visually perceived environments.

- A novel method, LLM-Planner, is proposed to perform few-shot high-level planning for embodied agents.

- The LLM is enhanced with physical grounding so that it can generate and update plans grounded in the current environment.

- Experiments on the ALFRED dataset show that using less than 0.5% of paired training data, LLM-Planner achieves competitive performance with recent baselines that are trained using the full training data.

- This work opens the door for developing versatile and sample-efficient embodied agents that can quickly learn many tasks.

Problem Statement

- Before the LLM era, language-driven agents required a large number of labeled samples to learn each task.

- Recently, LLM-based agents have shown strong few-shot learning abilities. However, they are evaluated in limited settings:

  - Example: evaluation in only two environments with 15 object types

- In addition, current work mostly generates a single static plan from the language instruction instead of dynamically adjusting the plan based on feedback from the environment.

Methodology

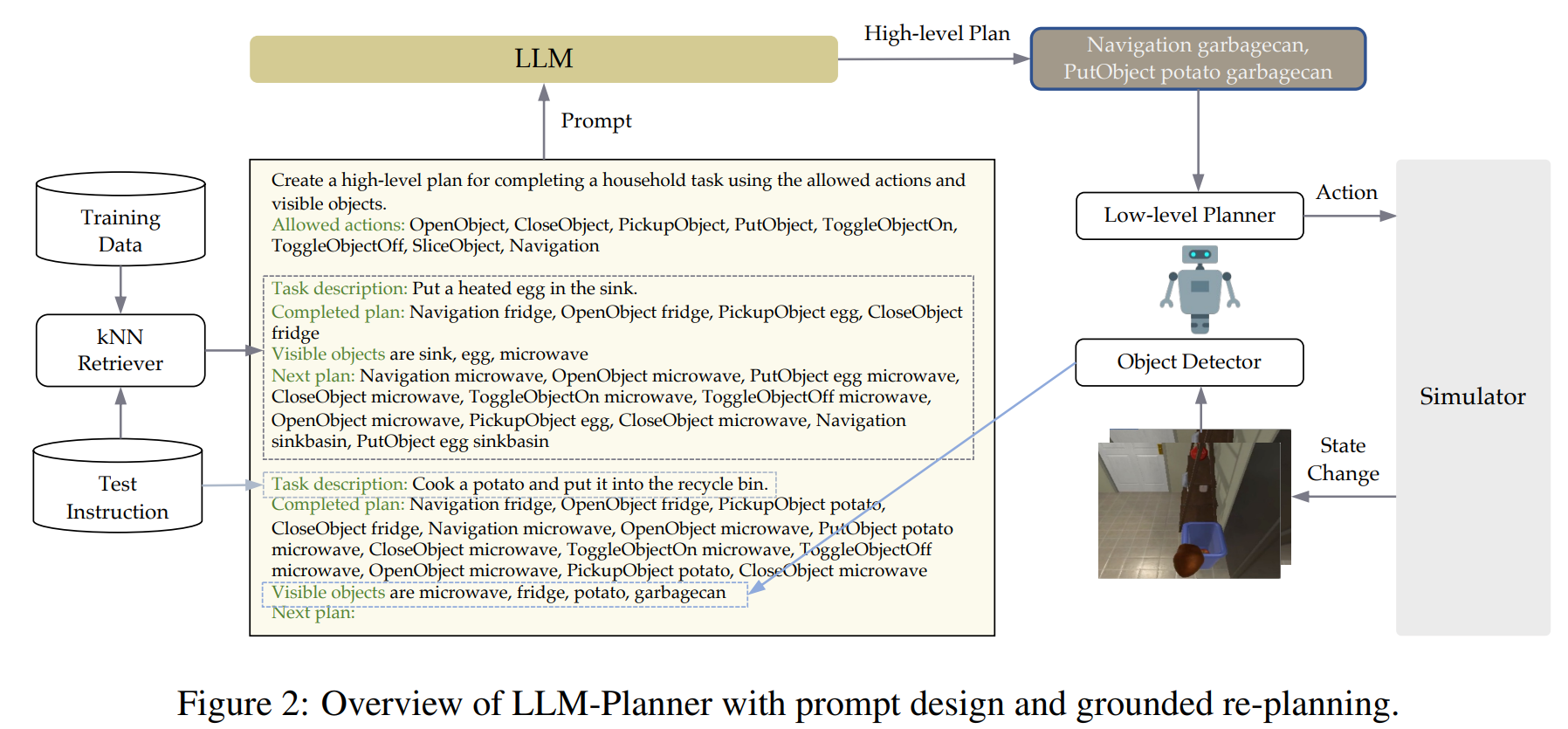

Overview

- Design an appropriate prompt:

  - An appropriate prompt guides the LLM to generate high-level plans (HLPs).

- kNN retriever:

  - The authors adopt a kNN retriever to select similar examples for the LLM's in-context learning.

- Grounded re-planning algorithm:

  - A grounded re-planning algorithm enhances the LLM's ability to adapt to the environment, improving HLP quality.

1. Prompt Design

As shown in Figure 2, the prompt begins with:

- An intuitive explanation of the task and a list of allowable high-level actions.

- Next, in-context examples selected by the kNN retriever, each consisting of:

  - “Task description: [high-level goal instruction].”

  - “Step-by-step instructions (optional): [step-by-step instructions]”

- Dynamic grounded re-planning information, consisting of:

  - “Completed plan: [sub-goals that have been completed]”

  - “Visible objects: [observed objects]”

- Finally, the test example is appended in the same format, ending with “Next plan:”.
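To make the prompt layout concrete, here is a minimal Python sketch that assembles a prompt in the format described above. It is an illustration under stated assumptions, not the authors' code: the `Example` class, `format_example`, `build_prompt`, the `TASK_EXPLANATION` wording, and the `ALLOWED_ACTIONS` list are all hypothetical, with the action names loosely modeled on ALFRED-style interactions.

```python
from dataclasses import dataclass, field

# Hypothetical action list, loosely modeled on ALFRED-style interactions;
# the paper's exact prompt wording may differ.
ALLOWED_ACTIONS = ["Navigation", "PickupObject", "PutObject", "OpenObject",
                   "CloseObject", "ToggleObjectOn", "ToggleObjectOff", "SliceObject"]

# Placeholder task explanation (an assumption, not quoted from the paper).
TASK_EXPLANATION = ("Create a high-level plan for completing a household task "
                    "using the allowed actions and visible objects.")


@dataclass
class Example:
    """One prompt entry (hypothetical field names)."""
    task: str                                           # high-level goal instruction
    steps: list[str] = field(default_factory=list)      # optional step-by-step instructions
    completed: list[str] = field(default_factory=list)  # sub-goals completed so far
    visible: list[str] = field(default_factory=list)    # objects observed so far
    next_plan: list[str] = field(default_factory=list)  # target HLP; empty for the test example


def format_example(ex: Example, is_test: bool = False) -> str:
    lines = [f"Task description: {ex.task}"]
    if ex.steps:  # step-by-step instructions are optional
        lines.append(f"Step-by-step instructions: {' '.join(ex.steps)}")
    lines.append(f"Completed plan: {', '.join(ex.completed)}")
    lines.append(f"Visible objects: {', '.join(ex.visible)}")
    # The test example ends with an open "Next plan:" for the LLM to complete.
    lines.append("Next plan:" if is_test else f"Next plan: {', '.join(ex.next_plan)}")
    return "\n".join(lines)


def build_prompt(in_context: list[Example], test: Example) -> str:
    header = f"{TASK_EXPLANATION}\nAllowed actions: {', '.join(ALLOWED_ACTIONS)}"
    blocks = [header] + [format_example(ex) for ex in in_context]
    blocks.append(format_example(test, is_test=True))
    return "\n\n".join(blocks)


if __name__ == "__main__":
    demo = Example(task="Cook an egg.", visible=["Egg", "Microwave"],
                   next_plan=["Navigation Egg", "PickupObject Egg"])
    print(build_prompt([demo], Example(task="Cook a potato.")))
```

Because the test example reuses `format_example`, it is guaranteed to share the in-context examples' format; only its final line is left open.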

2. In-context Example Retrieval

- High-quality in-context examples can improve the performance of the LLM agent.

- Intuitively, if the current task is to “cook a potato,” an in-context example that demonstrates “cooking an egg” is likely more informative than one that demonstrates how to “clean a plate.”

- A frozen BERT-base model is used to embed the test example and each training example.

- Next, the Euclidean distance between embeddings is used to measure the similarity between tasks.

- The K most similar examples to the current task are selected as in-context examples.
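The retrieval step reduces to a nearest-neighbor search over task embeddings. The sketch below assumes the embeddings have already been computed (for example, by a frozen BERT-base encoder; how its outputs are pooled into one vector is not specified here). `TRAIN_EMBS` and `QUERY_EMB` are hypothetical 3-dimensional toy vectors standing in for real BERT embeddings, and `knn_retrieve` is an illustrative name.

```python
import math

# Hypothetical toy embeddings standing in for frozen BERT-base task embeddings.
TRAIN_TASKS = ["cook an egg", "clean a plate", "slice a tomato"]
TRAIN_EMBS = [(0.9, 0.1, 0.0), (0.0, 1.0, 0.2), (0.5, 0.0, 0.9)]
QUERY_EMB = (1.0, 0.0, 0.1)  # pretend embedding of "cook a potato"


def knn_retrieve(query_emb, train_embs, k):
    """Return the indices of the k training examples closest to query_emb
    under Euclidean distance (smaller distance = more similar)."""
    scored = sorted((math.dist(query_emb, emb), i) for i, emb in enumerate(train_embs))
    return [i for _, i in scored[:k]]


if __name__ == "__main__":
    for i in knn_retrieve(QUERY_EMB, TRAIN_EMBS, k=2):
        print(TRAIN_TASKS[i])
```

With these toy vectors the script prints "cook an egg" followed by "slice a tomato", while "clean a plate" is the farthest. Switching to real embeddings only changes how `TRAIN_EMBS` and `QUERY_EMB` are produced; for a large training set, a vectorized or approximate search would replace the linear scan.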

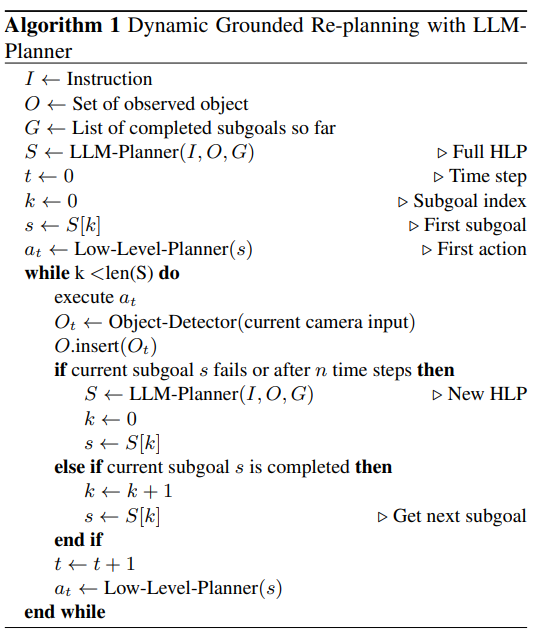

3. Grounded Re-planning

- Static high-level planning lacks grounding in the physical environment, which can lead to:

  - the agent failing to execute an action (e.g., bumping into a wall)

  - the agent taking a long time and still failing to accomplish the task (e.g., wandering endlessly)

- Re-planning is triggered under two conditions:

  - the agent fails to execute an action

  - a fixed number of steps has elapsed

- The re-planning algorithm generates a new plan based on the partially completed HLP and the objects observed so far, helping the agent get unstuck.
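The control flow of the two re-planning triggers can be sketched as follows. This is a simplified illustration, not the authors' implementation: `plan_fn` stands in for the LLM call (prompted with the completed sub-goals and visible objects), `execute_fn` stands in for the low-level controller, and, as a simplification, each sub-goal counts as one step toward the fixed step budget. All names and the toy potato scenario are hypothetical.

```python
from typing import Callable

Planner = Callable[[list[str], list[str]], list[str]]  # (completed, visible) -> remaining sub-goals
Executor = Callable[[str], tuple[bool, list[str]]]     # sub-goal -> (success, newly seen objects)


def run_with_replanning(plan_fn: Planner, execute_fn: Executor,
                        replan_every: int, max_steps: int) -> tuple[list[str], int]:
    """Execute sub-goals, re-planning on failure or after `replan_every` steps.
    Returns the completed sub-goals and the number of re-plans."""
    completed: list[str] = []
    visible: list[str] = []
    plan = plan_fn(completed, visible)  # initial HLP, made before anything is observed
    steps_since_plan = 0
    replans = 0
    for _ in range(max_steps):
        if not plan:
            break
        subgoal = plan.pop(0)
        ok, seen = execute_fn(subgoal)
        for obj in seen:  # accumulate observed objects without duplicates
            if obj not in visible:
                visible.append(obj)
        steps_since_plan += 1
        if ok:
            completed.append(subgoal)
        # Trigger 1: failed action. Trigger 2: fixed step budget reached.
        if not ok or steps_since_plan >= replan_every:
            plan = plan_fn(completed, visible)  # continue from the partial plan
            steps_since_plan = 0
            replans += 1
    return completed, replans


def run_toy_demo() -> tuple[list[str], int]:
    """Hypothetical scenario: the planner first guesses the potato is by the fridge."""
    world = {"near_potato": False}

    def planner(completed: list[str], visible: list[str]) -> list[str]:
        if "Potato" in visible:  # grounded plan once the potato has been seen
            full = ["Navigation CounterTop", "PickupObject Potato",
                    "Navigation Microwave", "PutObject Microwave"]
        else:                    # ungrounded initial guess
            full = ["Navigation Fridge", "PickupObject Potato",
                    "Navigation Microwave", "PutObject Microwave"]
        return [g for g in full if g not in completed]

    def executor(subgoal: str) -> tuple[bool, list[str]]:
        if subgoal == "Navigation Fridge":
            return True, ["Fridge", "Potato"]  # potato spotted on a distant counter
        if subgoal == "Navigation CounterTop":
            world["near_potato"] = True
            return True, ["CounterTop"]
        if subgoal == "PickupObject Potato":
            return world["near_potato"], []   # fails unless the agent is close enough
        return True, []

    return run_with_replanning(planner, executor, replan_every=3, max_steps=20)


if __name__ == "__main__":
    print(run_toy_demo())
```

In the toy run, the first re-plan is triggered by the failed `PickupObject Potato`, and the second by the step budget after three more sub-goals; both re-plans keep the already completed sub-goals instead of restarting from scratch.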

4. Integration with Existing Vision-and-Language Navigation (VLN) Models

- The VLN task is defined as follows:

  - Given a language instruction I, an agent needs to predict and carry out a sequence of primitive actions in the environment E to accomplish the task.

- The authors integrate LLM-Planner with existing VLN models, using HLSM to turn the HLP from LLM-Planner into low-level plans.
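The division of labor between the high-level planner and the low-level model can be illustrated with a toy expansion of an HLP into primitive actions. This is not HLSM: the grid world, the `OBJECT_CELLS` table, the `MoveEast`/`MoveNorth` primitives, and the greedy path planner are hypothetical stand-ins (ALFRED's actual navigation primitives differ, and HLSM estimates object locations from perception).

```python
Subgoal = tuple[str, str]  # (high-level action, object), e.g. ("PickupObject", "Potato")

# Hypothetical toy map: object -> (x, y) grid cell. A real low-level model
# would estimate object locations from perception instead.
OBJECT_CELLS: dict[str, tuple[int, int]] = {"CounterTop": (2, 0), "Microwave": (2, 3)}


def navigate(start: tuple[int, int], goal: tuple[int, int]) -> tuple[list[str], tuple[int, int]]:
    """Greedy Manhattan path on an obstacle-free grid (stand-in for a real path planner)."""
    x, y = start
    gx, gy = goal
    moves: list[str] = []
    while x != gx:
        step = 1 if gx > x else -1
        x += step
        moves.append("MoveEast" if step == 1 else "MoveWest")
    while y != gy:
        step = 1 if gy > y else -1
        y += step
        moves.append("MoveNorth" if step == 1 else "MoveSouth")
    return moves, (x, y)


def expand_plan(hlp: list[Subgoal], start: tuple[int, int] = (0, 0)) -> list[str]:
    """Turn a high-level plan into a flat list of (toy) primitive actions."""
    pos = start
    low_level: list[str] = []
    for action, obj in hlp:
        if action == "Navigation":
            moves, pos = navigate(pos, OBJECT_CELLS[obj])
            low_level.extend(moves)
        else:
            # Interaction sub-goals map to a single primitive on the target object.
            low_level.append(f"{action}({obj})")
    return low_level


HLP_EXAMPLE: list[Subgoal] = [("Navigation", "CounterTop"), ("PickupObject", "Potato"),
                              ("Navigation", "Microwave"), ("PutObject", "Microwave")]

if __name__ == "__main__":
    print(expand_plan(HLP_EXAMPLE))
```

The point of the sketch is the interface: the LLM only has to emit (action, object) pairs, and everything about how to reach or manipulate an object is delegated to the low-level model.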

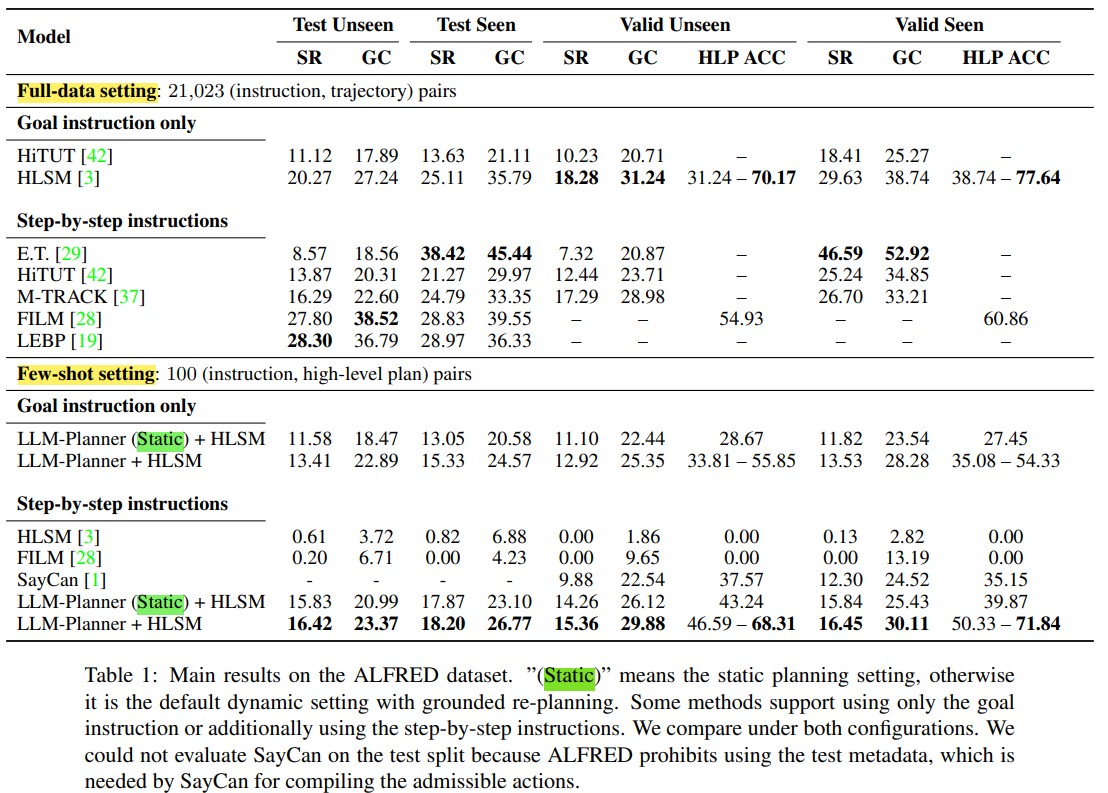

Results

Metrics

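- Success rate (SR): percentage of tasks fully completed by the agent

- High-level planning accuracy (HLP ACC): accuracy of the predicted high-level plan with respect to the ground-truth plan

- Goal-condition success rate (GC): percentage of goal conditions satisfied at the end of an episode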
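For reference, SR and GC can be computed from per-episode goal-condition counts as sketched below. The `Episode` record is hypothetical; an episode counts as a success when all of its goal conditions are met, and GC is pooled over all goal conditions across episodes, which is an assumption (averaging per-episode ratios is another plausible convention).

```python
from dataclasses import dataclass


@dataclass
class Episode:
    """Hypothetical per-episode record."""
    conditions_met: int    # goal conditions satisfied at the end of the episode
    conditions_total: int  # goal conditions the task requires


def success_rate(episodes: list[Episode]) -> float:
    """SR (%): episodes in which every goal condition is satisfied."""
    successes = sum(1 for e in episodes if e.conditions_met == e.conditions_total)
    return 100.0 * successes / len(episodes)


def goal_condition_rate(episodes: list[Episode]) -> float:
    """GC (%): satisfied goal conditions over all required ones, pooled across
    episodes (assumption; a mean of per-episode ratios is an alternative)."""
    met = sum(e.conditions_met for e in episodes)
    total = sum(e.conditions_total for e in episodes)
    return 100.0 * met / total


if __name__ == "__main__":
    eps = [Episode(3, 3), Episode(1, 4), Episode(2, 2), Episode(0, 1)]
    print(success_rate(eps), goal_condition_rate(eps))  # 50.0 60.0
```

GC rewards partial progress: the second episode fails under SR but still contributes one satisfied condition to GC.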

Reference

- Song et al. “LLM-Planner: Few-Shot Grounded Planning for Embodied Agents with Large Language Models”, ICCV 2023